Notes on Reinforcement Learning 20

Inverse RL to exploit vulnerabilities

Good morning everyone!

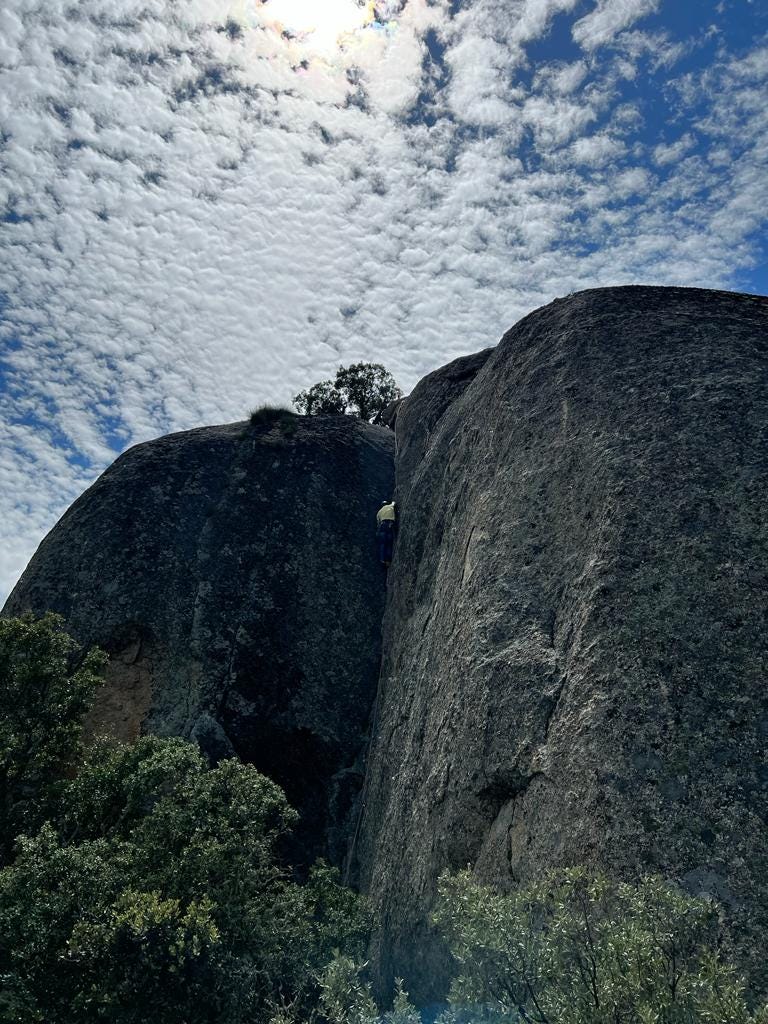

After a very successful crack-climbing Saturday and a very painful Sunday aftermath, I'm writing to you this Monday to update you a bit on how my thesis is going and to send you links to all the interesting RL work that was published this week.

On my thesis: I’m now fully a doctoral student, and over the next few months I’ll be selecting my subject of study. I had a meeting last week, and for now I’ll be researching adversarial examples and inverse reinforcement learning for a very cool application: exploring vulnerabilities in machine learning models.

What I like about this topic is that it is very transversal and opens a wide horizon of future opportunities.

For some examples of this kind of research, I can recommend the following papers:

SneakyPrompt: Evaluating Robustness of Text-to-image Generative Models' Safety Filters

PatchAttack: A Black-box Texture-based Attack with Reinforcement Learning

Adversarial Machine Learning Applied to Intrusion and Malware Scenarios: A Systematic Review

As for this week’s classification of articles, you may receive summaries of some of these papers in upcoming newsletters.

Also, I’m writing my second article (I’ll present my first one this July at the 9th International Conference on Time Series and Forecasting in Gran Canaria), so that’s very exciting.

Classification of articles on arxiv.org (May 29th - June 4th)

Most interesting papers

A Modular Test Bed for Reinforcement Learning Incorporation into Industrial Applications

Simulation and Retargeting of Complex Multi-Character Interactions

Hyperparameters in Reinforcement Learning and How To Tune Them

Symmetric Exploration in Combinatorial Optimization is Free!

Interpretable and Explainable Logical Policies via Neurally Guided Symbolic Abstraction

Hierarchical Reinforcement Learning for Modeling User Novelty-Seeking Intent in Recommender Systems

Identifiability and Generalizability in Constrained Inverse Reinforcement Learning

PAGAR: Imitation Learning with Protagonist Antagonist Guided Adversarial Reward

Offline Meta Reinforcement Learning with In-Distribution Online Adaptation

Diffusion Model is an Effective Planner and Data Synthesizer for Multi-Task Reinforcement Learning

Safe Offline Reinforcement Learning with Real-Time Budget Constraints

Direct Preference Optimization: Your Language Model is Secretly a Reward Model

Factually Consistent Summarization via Reinforcement Learning with Textual Entailment Feedback

All papers classified

Engineering Applications

Computing and Software Engineering

Energy

Image & Video

Industrial Applications

Navigation

Networks

Traffic & Flow

Robotics

UAVs

Others

Reinforcement Learning Theory

Actor-Critic

Algorithms

Computational Efficiency

Continual learning

Control Theory

Empirical Study of RL

Exploration Methods

Explainable/Interpretable Machine Learning

Federated Learning

Graph RL

Hierarchical RL

Imitation / Inverse / Demonstration Reinforcement Learning

Markov Decision Processes / Deep Theory

Meta-Learning

Model-based

Modular RL

Multi-Agent RL

Multi-Task RL

Offline RL

A Convex Relaxation Approach to Bayesian Regret Minimization in Offline Bandits

Delphic Offline Reinforcement Learning under Nonidentifiable Hidden Confounding

Efficient Diffusion Policies for Offline Reinforcement Learning

Improving and Benchmarking Offline Reinforcement Learning Algorithms

MetaDiffuser: Diffusion Model as Conditional Planner for Offline Meta-RL

Quality Diverse RL

Quantum Computing

Reinforcement Learning from Human Preferences/Feedback

Representation Learning

Risk-sensitive/safe/constrained RL

Robust RL

Sim-to-real

Transformers

Vision-Language Models

Visual RL

Others

Recommender Systems

Games and Game Theory

Philosophy and Ethics

Healthcare Applications

Natural Language Processing

Adapting Pre-trained Language Models to Vision-Language Tasks via Dynamic Visual Prompting

Adversarial learning of neural user simulators for dialogue policy optimisation

Direct Preference Optimization: Your Language Model is Secretly a Reward Model

Factually Consistent Summarization via Reinforcement Learning with Textual Entailment Feedback

Thought Cloning: Learning to Think while Acting by Imitating Human Thinking

See you next week!!